The web is a complex beast. There are many moving parts involved in delivering a complete web application today. For a significant portion of my career, I have focused primarily on the architecture and implementation of the parts that an end-user never sees. Racks, servers, databases, switches, routers and load-balancers; the list goes on, but you get the point. The goal of such an architecture, of course, is to receive a user’s HTTP request and construct and return a complete result as quickly as possible. To say that there are “a lot of moving parts” in today’s web architectures is an understatement — they are beasts.

What makes this even more complicated is that once you spew forth the result to the end-user you have a daunting set of user-perceptible performance issues remaining to be addressed. This performance challenge happens in a hostile environment: one we do not control (the user’s computer) over a long-haul network we do not control driven via a browser we did not select — a daunting challenge indeed.

We are very fortunate to have excellent tools at our disposal with which to tackle this challenge. Two of my favorites are Yahoo’s YSlow! and Google’s Page Speed tools. Both are extensions to the most excellent Firebug add-on for Mozilla’s Firefox web browser. Both tools will help you dissect the various aspects of the content you deliver to end-users and understand how each bit will contribute to perceived slowness. On the web (as in most other things in life), perception is king. A user’s perception drives their response.

Irony: the not-so-delicious kind.

I recently attended the Velocity conference and the first workshop I attended was Steve Souders’ excellent presentation on Website Performance Analysis. Steve Souders is the original author of YSlow! which I use on a daily basis. Steve used YSlow! to show how to analyze website performance (as one might have assumed from his workshop title). I popped open YSlow! on our corporate website and... horror!

While OmniTI has enormous breadth in the Internet space, we are primarily known as an Internet performance and scalability company. This made the fact that we received an F on YSlow! all the more embarrassing. This was a case of the right hand not knowing what the left hand was doing — something we evangelize against. I decided that I would fix that and aim to do it by the end of Steve's presentation. A play-by-play follows.

No Expires Headers.

It turns out that our images, JavaScript, and CSS didn’t have Expires headers. Our CSS is in a directory /c/, our JavaScript is located in /js/, and all our images are in /i/. I could set these headers by content type, but a location-based approach gives me the flexibility of serving dynamic/uncacheable content with those content types if I choose to later:

    <Directory "/www/sites/omniti.com/www/i">
        ExpiresActive On
        ExpiresDefault "access plus 1 month"
    </Directory>

    <Directory "/www/sites/omniti.com/www/c">
        ExpiresActive On
        ExpiresDefault "access plus 1 month"
    </Directory>

    <Directory "/www/sites/omniti.com/www/js">
        ExpiresActive On
        ExpiresDefault "access plus 1 month"
    </Directory>
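For comparison, the content-type approach mentioned above might look something like the sketch below. It is not the configuration used on the site. It assumes mod_expires is loaded and that your server labels JavaScript as application/javascript; some Apache installs use application/x-javascript instead, so check your mime.types before copying it.

```apache
# Sketch only: expire static assets by MIME type rather than by directory.
# Assumes mod_expires is available; the MIME types listed are assumptions
# about how your server labels these files.
<IfModule mod_expires.c>
    ExpiresActive On
    ExpiresByType image/gif  "access plus 1 month"
    ExpiresByType image/png  "access plus 1 month"
    ExpiresByType image/jpeg "access plus 1 month"
    ExpiresByType text/css   "access plus 1 month"
    ExpiresByType application/javascript "access plus 1 month"
</IfModule>
```

The trade-off is the one described above: this version would also mark any dynamically generated CSS, JavaScript, or images as cacheable for a month, which the directory-based rules avoid.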

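Using ETags.

ETags are on. This isn’t really a problem in and of itself, but some of our static content can be served by multiple machines, and Apache’s default ETag is derived in part from the file’s inode number. The same file therefore gets a different ETag on each machine, so a conditional request that lands on a different server fails to validate and the content is sent again: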

    <FilesMatch "\.(js|css|gif|png|jpe?g)$">
        FileETag None
    </FilesMatch>
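If you would rather keep ETags than switch them off, one alternative is to build them only from attributes that can match across machines. The sketch below is a variation, not what was done on the site. It assumes your deployment process preserves modification times (for example, rsync with its times option), so that every server sees the same mtime and size for a given file.

```apache
# Sketch only: keep ETags but leave the inode out of the calculation.
# Assumes file mtime and size are identical on every server; if deploys
# do not preserve mtime, ETags will still differ between machines.
<FilesMatch "\.(js|css|gif|png|jpe?g)$">
    FileETag MTime Size
</FilesMatch>
```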

Uncompressed content.

This is even easier. We run Apache 2.2, so:

    AddOutputFilterByType DEFLATE \
        text/html text/plain text/xml \
        application/javascript text/css
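A slightly more defensive variant is sketched below. It wraps the directive in a module check and also lists application/x-javascript. That second type is an assumption about how some servers label .js files, not something taken from this site's setup. Compression is applied by response content type, so a type that is not listed goes out uncompressed.

```apache
# Sketch only: compress text-based responses when mod_deflate is loaded.
# application/x-javascript is included as an assumption; keep only the
# types your server actually sends.
<IfModule mod_deflate.c>
    AddOutputFilterByType DEFLATE text/html text/plain text/xml text/css
    AddOutputFilterByType DEFLATE application/javascript application/x-javascript
</IfModule>
```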

No CDN.

We have a fast CDN-like caching layer residing at s.omniti.net that we can leverage... so I flipped all the images over to that. Technically, this is cheating because you have to add s.omniti.net to the YSlow! configuration to be recognized as a CDN. I was pleased to learn that even without formally moving the images to a known CDN, we still moved to an A rating in YSlow!

Assets served from a domain with cookies.

The move of all static assets to s.omniti.net resolved this issue. This goes to show that even if you don’t have a CDN, simply putting your static assets on a different domain (one that sets no cookies) can considerably improve performance in two ways: (1) it allows for more concurrency at the network layer, because browsers limit the number of simultaneous connections per hostname, and (2) it shrinks each upstream request, because no cookies are sent along with it.

The result?

A noticeably faster web site in under 45 minutes.

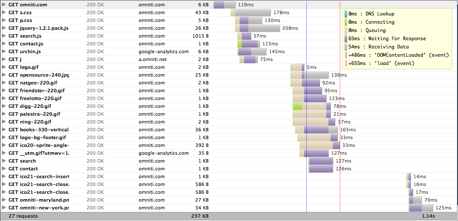

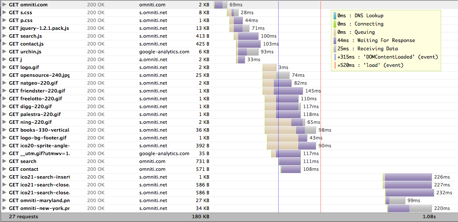

Before I fixed things up, it took 486ms to render (over the conference Internet connection).

After a bit of work, I was able to drop the time-to-render to 315ms over the same link. That's a 35% reduction, and it almost drops the page load time down into the “so fast it doesn’t matter” arena.

There are several things I’d like to do that would further improve page load/render times. The JavaScript could be consolidated into a single file (aside from the web analytics parts). The CSS could also be consolidated from two files into one. On our about page we have thumbnail photos of all our staff. They are all the same size, so we could easily combine them into a single image and use CSS sprites, which would dramatically improve the perceived performance of that page.

Some things we did right? Our search is wicked fast, as we pull the results via AJAX and make a single DOM manipulation to display them.

Next steps.

Go fix your site. Make it faster. Make the web a better place. It took me 45 minutes to make a significant positive impact. Granted, on a web application I didn’t know, or one more complicated than our corporate site (which I believe all are), it would take a bit longer. It’s worth it. Do it. Or hire us to do it.