We’d all like to spend as little money as possible to get the performance we desire from our computing hardware. When the term “commodity” is used in relation to computing, it typically refers to products that are mass-produced and widely available, with little to distinguish them other than price. This is in contrast to “enterprise” hardware: specialized, vertically integrated product lines such as Sun SPARC and IBM POWER that target a narrower slice of the computing market and differentiate themselves much more on features than on price. When I say “commodity”, I don’t simply mean standardized hardware; I mean the cheapest, lowest-common-denominator gear that gets the job done. There are legitimate use cases for both types of hardware, but as with all computing solutions, there are tradeoffs that must be understood to make the wisest choices.

The choice to design an architecture with commodity hardware in mind comes with some enticing benefits. First, instead of one expensive widget, I can afford a bunch of cheaper widgets and spread my workload among them, which also helps isolate failures and improves the overall continuity of service to my customers. Second, it allows me to scale my solution incrementally as the demands of the business grow. Third, money saved by avoiding pricey hardware is freed to be spent in other areas.

Data storage is one market where there is a stark difference between enterprise and commodity hardware. The first time I heard the term RAID, I learned that the “I” stood for “Inexpensive”. Later, I discovered that it is often given as “Independent”. Both make sense in context, but the former meaning seems to have been lost now that 15K-rpm drives are de rigueur and sit at the top end of the price range. Lowly 7200-rpm or even 5400-rpm SATA drives occupy the low end. This is but one area of computing where commodity hardware is not seen as capable of matching more expensive enterprise hardware. However, a holistic systems approach reveals that there are plenty of places where commodity drives make sense in terms of delivering on business goals and ensuring a high quality of service.

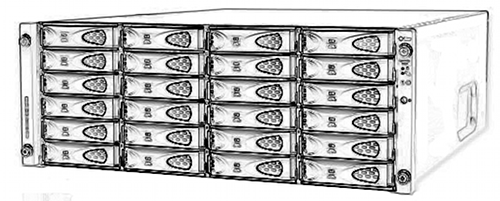

At the high end, dedicated storage arrays with custom hardware controllers filled with 15K-rpm drives define enterprise storage. These are speed demons; the high spindle speed means low rotational latency (around 2ms, compared to 4-5ms for 7200-rpm drives). To drive latency even lower, some arrays use a technique called “short-stroking,” which uses only the outermost tracks of each disk platter, minimizing the distance that the heads must move. Such luxury comes with a steep price. Short-stroking reduces the usable space of each drive, requiring more drives for a given amount of storage. Not only that, but 15K drives max out at 300GB, so obtaining the kind of storage sizes required by large enterprises requires entire cabinets full of disk shelves. 15K drives are also power-hungry, hot-running monsters. At a time when electricity costs and carbon footprint are becoming increasingly important, the luxury of high performance is ever more expensive, and the cost of adding more spindles outstrips the performance gained from them.
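The latency figures above follow from simple arithmetic: on average, the platter has to turn half a revolution before the requested sector passes under the head. Here is a minimal Python sketch of that calculation. The function name is my own, and it covers only the rotational component, not seek time or controller overhead.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average rotational latency: the time for half a revolution, in ms."""
    ms_per_revolution = 60_000.0 / rpm
    return 0.5 * ms_per_revolution


for rpm in (15000, 7200, 5400):
    print(f"{rpm:>5} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")

# Prints:
# 15000 rpm: 2.00 ms
#  7200 rpm: 4.17 ms
#  5400 rpm: 5.56 ms
```

Real-world access times are higher once head movement is added, which is exactly the part that short-stroking tries to shrink.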

By contrast, a commodity storage approach seeks to maximize storage space and minimize costs, both initial and ongoing. The same budget can buy more spindles to keep latencies down and IOPS up. These additional spindles can fit within the same (or less) space and power budget as well.
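To make the "more spindles for the same budget" argument concrete, here is a rough back-of-the-envelope sketch. The prices and seek times below are made-up placeholders, not quotes or measurements, and the model (one random I/O costs one average seek plus half a rotation) ignores caching, queuing, and RAID overhead. Plug in real numbers for your own gear before drawing conclusions.

```python
def random_iops_per_drive(avg_seek_ms: float, rpm: float) -> float:
    """Rough random IOPS for one drive: 1 / (average seek + half a rotation)."""
    rotational_ms = 0.5 * 60_000.0 / rpm
    return 1000.0 / (avg_seek_ms + rotational_ms)


def spindles_and_iops(budget: float, price_per_drive: float,
                      avg_seek_ms: float, rpm: float) -> tuple[int, float]:
    """How many drives the budget buys, and their combined rough IOPS."""
    drives = int(budget // price_per_drive)
    return drives, drives * random_iops_per_drive(avg_seek_ms, rpm)


# Hypothetical inputs, chosen only for illustration.
BUDGET = 4000.0
enterprise = spindles_and_iops(BUDGET, price_per_drive=400.0,
                               avg_seek_ms=3.5, rpm=15000)
commodity = spindles_and_iops(BUDGET, price_per_drive=100.0,
                              avg_seek_ms=8.5, rpm=7200)

for label, (drives, iops) in (("15K", enterprise), ("7200", commodity)):
    print(f"{label:>4} rpm: {drives} drives, roughly {iops:.0f} aggregate IOPS")
```

Each commodity drive is slower on its own, but under these assumed prices the larger spindle count more than makes up the difference in aggregate.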

The commodity storage picture is not all rosy. Enterprise drives are manufactured to withstand the higher level of vibration that comes with putting a lot of drives into the same chassis. They also have lower bit-error rates and higher MTBF than their commodity cousins. Simply put, commodity drives fail more often. “Failure” could be anything from silent data corruption to outright mechanical malfunction. Commodity drives also don’t spin as fast — 7200 rpm at most. That increases latencies, especially on random read loads. Any solution that employs commodity drives must account for these realities and work around them.

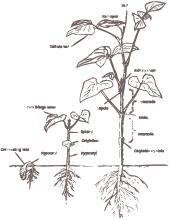

Thanks to some excellent, disruptive technology from Sun, namely ZFS, I can design a system around inexpensive, 7200 rpm drives, bolstered by a few SSDs, which provides more capacity with fewer spindles and improves read/write latencies far beyond the capabilities of short-stroked 15K drives. ZFS features such as end-to-end checksums, guaranteed on-disk consistency, intelligent prefetch, and immense scalability make it a good fit for my motley array of cheap disks. If I run low on space, adding more disks is extremely simple and cost-effective. Likewise, features like self-healing data and top-down, metadata-driven resilvering mean that silent data corruption and device failures don't have to be the ulcer-inducing events they once were.
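For readers who haven't seen self-healing in action, the idea can be illustrated with a toy model. The Python sketch below is not ZFS code and does not reflect its on-disk format; the `MirroredBlock` class and its fields are hypothetical names of my own. It shows only the principle: keep a checksum apart from the data, verify every read against it, and repair a bad copy from a good one.

```python
import hashlib


def checksum(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


class MirroredBlock:
    """Toy two-way mirror whose checksum is stored apart from the data copies."""

    def __init__(self, data: bytes):
        self.copies = [bytearray(data), bytearray(data)]  # one per "disk"
        self.expected = checksum(data)  # held by the parent, not beside the data

    def read(self) -> bytes:
        # Find the first copy that still matches the stored checksum.
        good = None
        for copy in self.copies:
            if checksum(bytes(copy)) == self.expected:
                good = bytes(copy)
                break
        if good is None:
            raise IOError("all copies failed checksum verification")
        # Self-heal: overwrite any copy that no longer matches.
        for i, copy in enumerate(self.copies):
            if checksum(bytes(copy)) != self.expected:
                self.copies[i] = bytearray(good)
        return good


block = MirroredBlock(b"customer records")
block.copies[0][0] ^= 0xFF  # simulate silent corruption on one disk
data = block.read()         # returns the good data and repairs the bad copy
```

The caller never sees the corrupted bytes, and the damaged copy is rewritten as a side effect of an ordinary read. That is the behavior that lets cheap disks with higher bit-error rates be trusted with real data.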

Storage is just one example of how some careful thought during the design process can yield significant savings during implementation. The same theory applies to entire server farms when deploying web applications. An application that is designed to scale horizontally can be run on a large number of cheap servers rather than a few very expensive ones.

Sometimes it doesn’t make sense to go the commodity route. It is as important to know when you can scale horizontally as it is to know when you should not. Rearchitecting an application to scale out may require more engineering effort than it would cost to buy a larger single machine and scale it up. I'm not just talking about high-end RISC gear either; some relatively large x86-64 configurations are possible. For example, the upper end of IBM’s System x line can be configured with up to four 4U chassis linked together in an 8-socket, 48-core, 128-DIMM system. That’s a monster box (cue the Tim Allen grunts). If you can run your app today on one machine, and you can plan its growth to fit into a monster box, then the cost-effective approach may just be to use the larger machine.