"OMG, Facebook is DOWN!" That was the cry from millions when Facebook was unavailable for about three hours because of network issues. Given the nature of Facebook's service, the downtime did not have any long-lasting effects on its user base. In fact, some say that the productivity significantly increased during the three-hour window without access to Facebook. The bottom line is: the unavailability of the social networking service doesn't negatively impact its users (ego and reputation of the service aside). Does this also hold true for the companies leveraging Facebook, or other social networks, like Twitter, Flickr, FourSquare in their daily operations?

Today, more and more companies operating online businesses try to break into the social media realm by leveraging existing services to increase the visibility of, and loyalty to, their brand and to bring more people to their sites (and, consequently, to increase conversions, visits, purchases or participation). I've seen many incarnations of social networking implementations, from basic, simplified authentication with Facebook Connect augmenting the regular process (for ease of registration/login), to full-blown applications relying heavily upon multiple features available from these services' APIs. Now, personally, I am all for having these services available and used strategically throughout the applications. They provide a tremendous benefit not only in brand familiarity and content, but also in cost savings -- you're leveraging years of someone else's work for your gain. Consider Flickr. The storage, CDN and REST APIs to present the assets have all been developed and tested for you by a number of smart engineers over a number of years; all you need to do is integrate the functionality within the content of your site. The same services are available to everyone, and you make the business decision about which features would be beneficial to your company's strategy. The implementation of the features, however, varies significantly.

One of the major risks when implementing a third-party service is the reliance upon the availability of that service -- one that you have no control over. And, no matter how large or successful that service is, it will go down at one point or another. Twitter, as an example, is well known for intermittent service degradation, often followed by noticeable outages. Now, imagine what happens during Twitter downtime if your site's content relies heavily, or exclusively, on the Twitter API.

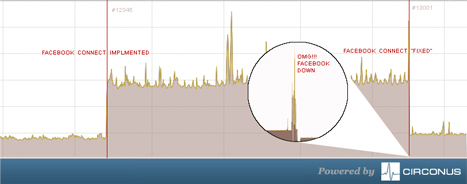

Let's examine a situation where a large online media company decided to switch to Facebook Connect as the exclusive authentication method for their site. (To head off a discussion about the viability of this choice, let me just note that there were legitimate business reasons for choosing this approach.) This is where the fun starts. The accompanying graph represents HTTP load time for the pages on the site at every stage of the process. Even without the captions on the graph, anyone should be able to pinpoint the exact time when the new code was deployed and the load time of the pages tripled. The project owners were notified, but since the load times were extremely low to begin with (thank you, properly implemented caching), the load speed was deemed acceptable, and the changes remained in production. Time passed. And then some more time passed. And then the dark day came: the day when Facebook went down. The page load times on the media site tripled again for a very brief period of time (while Facebook's servers were just lagging), and then dropped to 0, i.e. "users are unable to see the site." Just like that, Facebook's problem became the company's problem.

On closer investigation of the code, the problem was identified and resolved quickly; as a byproduct, the fix also brought the page load time back down to its original level. Still, the incident shows how dependent your site can become upon third-party service availability if the features are not implemented correctly.

How can these issues be avoided? A few common-sense rules, which for some reason are often ignored during development, should help you integrate external services without hurting your site's performance.

- Only connect to a third-party service where needed.

Don't try to connect to Facebook on every page load to validate that the user is still the user to whom you displayed the previous page. Cache the results locally.

- Don't make connections to a third-party service in the critical path of the page load.

Don't load Google Analytics as the first thing on your page; you will delay the display of the content that actually matters. Make the connections after your content is loaded, or better yet, connect asynchronously.

- Trap time-outs and errors.

You do it with your database connections, so why would you treat external connections differently?

- Create a fallback plan.

You have no control over external services, but you do have control over the content presented to your users. If a Flickr feed is an essential feature of your site, store the displayed history locally, so you can fall back to the latest available content in case Flickr is unavailable. Remember, sometimes stale content is better than no content at all.

To make a blanket statement -- don't jump into using social media features without first identifying a need for them, and when you do use them, make sure they support your primary business model. At the end of the day, when integrating any third-party service, you are trying to leverage the benefits of the available functionality to enliven the experience for your own users, not to inherit the service's availability problems. Integrate smartly, not blindly.