Transactions are Great

Transactions are a wonderful tool for web/application developers. If several things in the database need to be modified together, simply wrap them in a transaction. This will guarantee that either all of the changes succeed together, or they all fail together. This helps to remove complicated error conditions of partially successful updates and avoid being stuck in a situation that may no longer possible for the application to clean up.

The following is an example of a simple web transaction in Perl.*

# Turn off AutoCommit so we're in a transaction

local $dbh->{AutoCommit} = 0;

eval {

my $sth = $dbh->prepare('insert into users (name, email)

values (?,?) returning userid');

$sth->execute( $name, $email );

$sth->fetch( \$userid );

$sth = $dbh->prepare('insert into addresses (...)

values (?,?,?,?,?) returning addressid');

$sth->execute( $userid, $addr, $city, $state, $zip );

$sth->fetch( \$addressid );

$sth = $dbh->prepare('insert into user_history (...)

values (?,?,?) returning historyid');

$sth->execute( $userid, $addressid, 'User Created’ );

$sth->fetch( \$userid );

};

if($@) {

$dbh->rollback();

} else {

$dbh->commit();

}

# Return AutoCommit back on

$dbh->{AutoCommit} = 1;

The user’s data is inserted into all three tables that this business requires. Without the transaction, it would be possible for the first two statements to execute successfully but have the third fail leaving the user in an incomplete state! Thankfully a transaction provides a guarantee that all statements will succeed or get rolled back to how they were initially.

* Careful readers may have noticed this example isn’t handling errors properly.

Transactions are Hard

While providing great functionality, as transactions become more complex, mistakes are increasingly likely to be made. Error handling is a difficult topic and it is easy for developers to make simple mistakes that can have unpleasant side effects. Modifying the earlier example, a number of common errors that find their way into transactional code, can be illustrated.

Breaking “autocommit” state

In Perl, the DBI module regulates transactions with the “AutoCommit” flag. Normally AutoCommit is set to true, and each query is implicitly wrapped in a “BEGIN;

# Turn off AutoCommit so we're in a transaction

$dbh->{AutoCommit} = 0;

If anything unexpected happened, that value would never get reset. This changes that database handle for all future database queries that use it and can cause very long transactions, or unexpectedly large volumes of SQL queries to get rolled back when an error does happen. It’s always important to maintain the state of the AutoCommit flag, and return it back to its previous state.

Including outside operations in a transaction

Especially in larger operations, a developer may not even realize they are in a transaction and put other calls into the execution path. These calls are not part of the transaction, and will not get rolled back in the case of an error. Consider the following change:

eval {

my $sth = $dbh->prepare('insert into users (name, email)

values (?,?) returning userid');

$sth->execute( $name, $email );

$sth->fetch( \$userid );

$PARTNER->API->acknowledge_signup( $userid );

$sth = $dbh->prepare('insert into addresses (...)

values (?,?,?,?,?) returning addressid');

$sth->execute( $userid, $addr, $city, $state, $zip );

$sth->fetch( \$addressid );

};

If an error occurs during the second execute() statement, the first insert will be rolled back. However the Partner has already been notified of the acknowledgement via its API. This API call will not get rolled back causing the Partner to have an acknowledgement of something that never occurred. These acknowledgements should not occur until after the transaction has been successfully committed.

Slowing down other transactions

Updating a value in an MVCC database such as PostgreSQL causes a lock to be taken. Other clients may also want to update that value or table, but until the first transaction is committed or rolled back, the database cannot handle their request. These other clients will have to wait until that lock is released. It is not uncommon to see a thoughtless and expensive operation put in the middle of a transaction block causing other clients to wait needlessly. The API call in the previous example could have a network issue causing the transaction to block until its timeout is reached.

Error handling can have errors

It’s quite difficult to anticipate all of the ways errors can occur. Consider this piece of code:

eval {

my $sth = $dbh->prepare('insert into users (name, email)

values (?,?) returning userid');

$sth->execute( $name, $email );

$sth->fetch( \$userid );

$sth = $dbh->prepare('insert into addresses (...)

values (?,?,?,?,?) returning addressid');

$sth->execute( $userid, $addr, $city, $state, $zip );

$sth->fetch( \$addressid );

};

if($@) {

$dbh->rollback();

}

$response->send_success();

$dbh->commit();

Here the code does not take into consideration that successful completion of the eval does not mean that the transaction has completed. The commit command itself can return an error if the database connection was broken. In this case it is possible to have sent a success message, but the transaction would still get rolled back by the database.

While only a few are reviewed here, there are many ways that transactions can be done incorrectly. While there are solutions to all of these problems, it would be great for developers if there was a way to avoid most of the issues in the first place by not using transactions, yet still atomically doing the changes.

Enter Writeable CTEs

Common Table Expressions (CTEs) can be thought of like temporary tables that exist only for the life of that query. These are created by using the WITH keyword and are supported by most major databases. These can be quite powerful on their own and I’ve written about the recursive versions of these before. A simple example of a CTE would be:

with naughty_users as (

select * from users where banned = 1

)

select * from naughty_users;

Writeable CTEs were added to PostgreSQL in version 9.1. These took CTEs and super charged them by allowing the modification of data inside of these expressions. For example, inserting, updating, and deleting. This allows many powerful expressions to be chained together in a manner not possible before. For example being able to delete a set of rows, while being able to insert a subset of those rows into a log table.

with deletions as (

delete from users where expired is true

returning *

)

insert into deleted_even_user_archive

select * from deletions where userid % 2 = 0;

It is an important side note that CTEs are “optimization fences” to the query planner. The query planner will not attempt to re-arrange queries across table expression for faster execution plans. For more advanced users, this can be used to force the query planner to use certain indexes. However for the novice, it means the query planner cannot try to optimize a poorly written CTE whereas it may have been able to if it was written in a more traditional manner.

Replacing Insert Transactions with CTEs

This example consists of three separate insert queries:

insert into users (name, email)

values (?,?) returning userid

insert into addresses (userid, address, city, state, zip)

values (?,?,?,?,?) returning addressid

insert into user_history (userid, addressid, action)

values (?,?,?) returning historyid

These three queries could instead be expressed as a single CTE query that returns the ids of all three inserted rows.

with userdata as (

insert into users (name, email) values (?,?)

returning userid

), addressdata as (

insert into addresses (userid, address, city, state, zip)

select userid,?,?,?,?

from userdata

returning addressid

), historydata as (

insert into user_history (userid, addressid, action)

select userid, addressid,?

from userdata, addressdata

returning historyid

)

select userid, addressid, historyid

from userdata, addressdata, historydata;

Doing this as a single query avoids the transaction issue completely. The entire query is executed and succeeds or fails atomically. If part of the query throws an error the entire query is rolled back, like can be expected of any other query. Wrapping these inserts into the single CTE query allows:

- avoiding complicated transaction code

- avoiding complicated error handling code

- reduced query overhead

- minimization of locks duration (because the transaction runs faster, see below)

Performance Impact

In theory, this should provide a significant amount of back-and-forth savings on the network by running all three queries at once instead of serially. But nothing beats real world metrics. To test this, a free Postgres instance on Amazon RDS was created with the following settings:

Free Tier Postgres RDS instance of Postgres 9.3.3 Multi-AZ Deployment : No db.t2.micro - 1 vCPU, 1GB RAM General Purpose SSD storage, 20 GB

A free t1.micro instance running OmniOS r151012 AMI was created to test with. To reproduce these results instructions and scripts are available at https://github.com/bdunavant/cte_demo

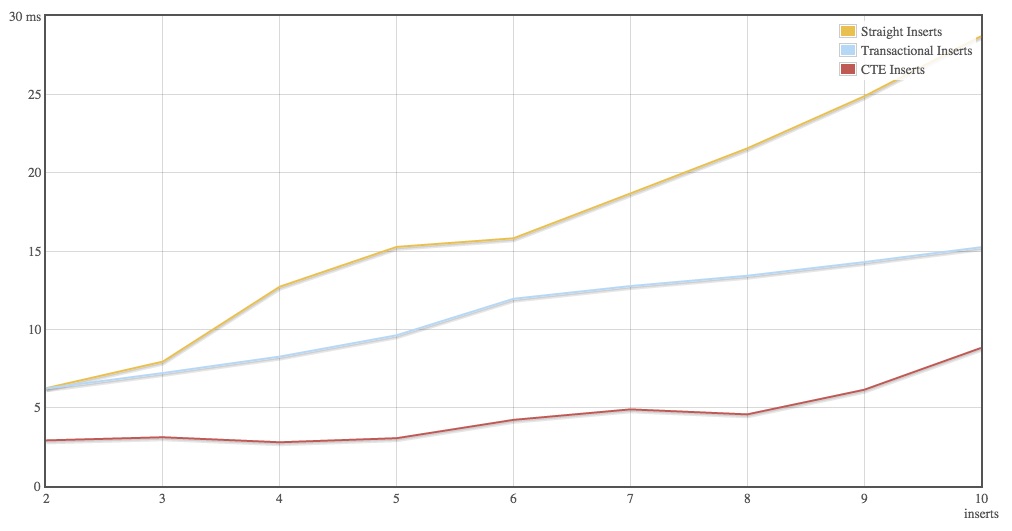

The ‘demo_web_inserts.pl’ script runs three different scenarios 100,000 times to get the average time spent for each set of inserts. The results were pretty close to expected.

Traditional without transaction took 758 seconds to run. 758 / 100000 = 7.58ms per set. Traditional with transaction took 737 seconds to run. 737 / 100000 = 7.37ms per set. CTE took 289 seconds to run. 289 / 100000 = 2.89ms per set.

It is interesting to note that the transaction version of the 3 inserts actually runs slightly faster than the non-transactional version. This has to do with Postgres not having to write the WAL logs to disk until the commit is issued. Less disk access means faster run times. The CTE version of the query gets to take advantage of this same fact since all three inserts are in the same commit.

Overall the CTE version of the inserts give an approximately 60% decrease in run time; which is to be expected if 1/3rd of the number of queries are being run.

To further explore the level of savings, a simple user insert is run for multiple iterations; 2 inserts vs CTE, 3 inserts vs CTE, and so on up til 10 inserts, to verify the savings scale upwards. This test is in demo_variable_inserts.pl. Each set is run with 100,000 iterations on the same setup as the previous test. Towards the end the test cases take significant time to complete (hours per iteration set). This is simply total time / iterations to determine an average (including outliers). EC2’s variable performance throws off the data but the trends appear pretty clearly.

Caveats

There’s no such thing as a free lunch. So there are reasons to not suddenly go in and update all of a project’s inserts statements to wrap them in CTEs.

- CTEs are often unsupported in ORMs requiring developers to step outside of the framework and supply the SQL themselves. This can break encapsulation in some cases or cause other side effects. Make sure the code doesn’t break by moving all of these queries together.

- CTE queries take some learning to write correctly, especially regarding this different method of returning the ids. Developers must fully understand how the SQL statements work. This is a tradeoff between less complex app code, for slightly more complex SQL statements.

- Postgres executes sub-statements and the main query concurrently making insert order unpredictable. This could have important implications to an application. In the tests, the insert order is forced by referencing the previous sub-statements.

- While this technique can technically also be done with update statement, updates have their own challenges (such as deadlocks) which are out of scope for this article.

Conclusion

Common Table Expressions are a great way to simplify some common database cases helping to reduce mistakes in transaction handling by avoiding transactions entirely. In addition they can improve performance out of a series of queries by merging them into a single query.